Prerequisites

- VPN for Potsdam University set up and working.

- Access to the HPC cluster requested and granted. I assume you have already managed to connect to the cluster with ssh or something similar.

- (for the scripts to work comfy) a way to use ssh without a password (GPG or using ssh-keygen for example).
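For the ssh-keygen route, a minimal sketch could look like the following. The login in CLUSTER_LOGIN is a made-up placeholder and the helper name setup_ssh_key is only for illustration; everything is wrapped in a function so nothing runs until you call it.

```shell
# Hypothetical placeholder: replace with your own user and the cluster's host name.
CLUSTER_LOGIN="yourusername@cluster.example.org"
KEY_FILE="$HOME/.ssh/id_ed25519"

setup_ssh_key() {
    # Generate a key pair only if this key file does not exist yet.
    if [ ! -f "$KEY_FILE" ]; then
        ssh-keygen -t ed25519 -f "$KEY_FILE"
    fi
    # Install the public key on the cluster; this asks for your password once.
    ssh-copy-id -i "$KEY_FILE.pub" "$CLUSTER_LOGIN"
}

# Run once (with the VPN connected): setup_ssh_key
```

After that, ssh to the cluster should no longer ask for the account password, which is what the rsync aliases further down rely on.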

Containerization (docker) in a Nutshell

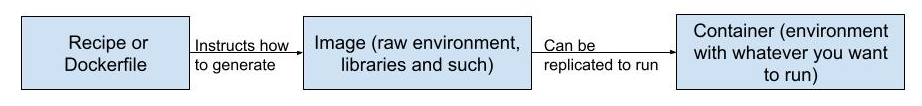

If you google what Containerization is, I am certain you will find many tutorials that go over the documentation without any kind of abstract explanation, which is just not that helpful. This is a better video. Let’s see it in a simple drawing:

We can think of a recipe as the instructions for “how can I have a virtual machine that runs things on its own”, and of an image as that virtual machine once it has been created. But we don’t want to rebuild that virtual machine every time we run a separate program, right? So we create containers for specific applications.

In the context of what I will be showing, the rationale for containers is more that we will make small modifications to our Python code, and building an image every time would be unnecessary and costly. We only need to rebuild the image when we use different libraries (or a different base image, of course).

A minimal working example

The example can be found at https://github.com/xarxaxdev/example_apptainer.

In this section I go over the relevant parts; you may want to first look at the section “How to work with the example” and then modify the parts of the code you want.

Project-relevant code:

generate_model.py -> Python main code: whatever you are trying to run with this tutorial. I loosely followed an existing Hugging Face tutorial; feel free to modify it for whatever NLP task you want to do.

requirements.txt -> Python libraries I needed for the main code of generate_model.py

Container and cluster-relevant code

slurm.job -> Defines the general parameters for running a task in slurm. Relevant lines I modified:

- #SBATCH --partition=gpu -> We want to use the partitions (machines) that have a GPU.
- #SBATCH --gpus=1 -> How many GPUs to use. You don't need more than 1.
- #SBATCH --chdir=. -> This is where you want to run this job; "." means the folder you are currently in. This is why in this example you will run things from /work/username/projectname.
- #SBATCH --time=4-11:59 -> I set the time to run the script to the max possible (Slurm reads this as days-hours:minutes).
- apptainer run --nv img.sif -> This is the command that actually runs the container we have created. img.sif is the image we will be generating.
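Put together, the lines above would give a job file roughly like this sketch. It is assembled only from the lines discussed here, so the real slurm.job in the repository may contain more; the #!/bin/bash first line is my addition, since sbatch expects a script with an interpreter line. It is written to slurm_sketch.job (a made-up file name) so the original file is not overwritten.

```shell
# Write a minimal job file; the quoted 'EOF' keeps the content literal.
cat > slurm_sketch.job <<'EOF'
#!/bin/bash
# Use the partitions (machines) that have a GPU.
#SBATCH --partition=gpu
# One GPU is enough.
#SBATCH --gpus=1
# Run from the folder the job is submitted from.
#SBATCH --chdir=.
# Time limit in days-hours:minutes.
#SBATCH --time=4-11:59

# Run the container; --nv makes the NVIDIA GPU available inside it.
apptainer run --nv img.sif
EOF
```

You would submit it from the project folder with sbatch slurm_sketch.job.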

recipe.def -> This is where we have the set of instructions used to build the image from which we run the containers. Let’s go over the sections it has:

- Bootstrap: docker
  From: pytorch/pytorch

Bootstrap tells Apptainer where the base image comes from; here it is a Docker image. pytorch/pytorch is a specific Docker image; we will use it as the base, install some libraries and define the main script. You can find other images at https://hub.docker.com/

- %files
  generate_model.py .
  requirements.txt .

This makes the 2 files available in "." (the main folder of the image).

- export PATH="$PATH:"

We want the image to also look in the current folder, so we set the PATH of the image to the existing PATH (evaluated with $) plus an empty entry after the ":", which the shell treats as the current folder.

- %post
  echo "Installing required packages..."
  pip3 install -r requirements.txt

Once the base image has been downloaded, the %post section runs to customize the image before it is saved.

- %runscript
  echo "running main script"
  python3 generate_model.py

The main script that will run when we run the image in a container.
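Seen as one file, the sections above would look roughly like this sketch. One assumption to flag: the export line is placed under a %environment header here, which is where Apptainer expects environment settings, but check the recipe.def in the repository for the actual layout. The sketch is written to recipe_sketch.def (a made-up file name) so the original recipe is left alone.

```shell
# Write the recipe; the quoted 'EOF' keeps $PATH literal instead of expanding it now.
cat > recipe_sketch.def <<'EOF'
Bootstrap: docker
From: pytorch/pytorch

%files
    generate_model.py .
    requirements.txt .

%environment
    # Assumption: the real recipe may place this line differently.
    export PATH="$PATH:"

%post
    echo "Installing required packages..."
    pip3 install -r requirements.txt

%runscript
    echo "running main script"
    python3 generate_model.py
EOF
```

Building it works the same way as with the original recipe: apptainer build img.sif recipe_sketch.def.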

Aliases that just made my life easier

You will find them in readme.md. There are just 4 variables and 3 aliases that make working locally, uploading the code and rerunning it simpler. You may want to add them to your .bashrc. The aliases are:

alias ssh_uni="ssh -X $CLUSTER_LOGIN"
alias update_example_apptainer="rsync -av -e ssh --exclude='*.pyc' --exclude='.git' --exclude='*/generated_models/*' $HOME/$project $CLUSTER_LOGIN:$PATH_IN_CLUSTER"
alias reverse_update_example_apptainer="rsync -av -e ssh --exclude='*.pyc' --exclude='.git*' --exclude='*generate_model.py' --exclude='*.sif' --exclude='*.bin' --exclude='*.pt' $CLUSTER_LOGIN:$PATH_IN_CLUSTER/$project $HOME"
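The aliases only work if the variables they use are defined before them in your .bashrc. Here is a sketch with made-up values: the names CLUSTER_LOGIN, PATH_IN_CLUSTER and project come from the aliases themselves, while cluster_user, the host name and the paths are placeholders of mine, so take the real definitions from readme.md. Printing the expanded command first is a cheap way to check the paths before rsync copies anything.

```shell
# Hypothetical values; adjust all of them to your own account.
cluster_user="yourusername"                          # helper name invented for this sketch
project="example_apptainer"                          # folder name under $HOME
CLUSTER_LOGIN="$cluster_user@cluster.example.org"    # placeholder host name
PATH_IN_CLUSTER="/work/$cluster_user"                # working directory on the cluster

# Dry check: build the upload command as a string and print it instead of running it.
upload_cmd="rsync -av -e ssh --exclude='*.pyc' --exclude='.git' $HOME/$project $CLUSTER_LOGIN:$PATH_IN_CLUSTER"
echo "$upload_cmd"
```

Because the aliases are written with double quotes, the variables are expanded when the alias is defined, which is why the definitions have to come first.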

How to work with the example

1. Clone from GitHub. I would recommend doing so in /home/username.
2. Customize code, aliases and variables:
   - Change “yourusername”.
   - Change “/example_apptainer” for whichever folder you stored it in. You may have to tinker with the aliases if it’s not in /home/username.
   - You should also change generate_model.py for your own code and requirements.txt for the libraries you need.
3. Run update_example_apptainer to upload the code from the project folder to the uni cluster’s working directory.
4. Run ssh_uni to access the cluster; then navigate to your working folder /work/username/example_apptainer.
5. Now build the image: apptainer build img.sif recipe.def. This is slow.
6. Now img.sif is in your folder; you could run the image with apptainer run --nv img.sif. Instead, use the cluster's slurm system and run sbatch slurm.job. Wait for the job to complete or fail.
7. Close the ssh connection and run reverse_update_example_apptainer to get the results from the cluster.
   - The job ran successfully: good job!
   - The job failed: you should check slurm-*jobid*.out to see what is wrong.
     - Need to modify requirements.txt and install new libraries? Do so, then go back to step 3 without skipping any steps.
     - You only needed to modify generate_model.py? Do so, then go back to step 3, but you can skip step 5.
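The rebuild rule from the last step can be sketched as two small shell helpers to use on the cluster. The function names needs_rebuild and latest_slurm_log are invented for this example, and the check is based on file timestamps only, so treat it as a convenience rather than a guarantee.

```shell
# True when the image is missing or older than the files it is built from.
# generate_model.py is left out on purpose: changing it does not require a rebuild.
needs_rebuild() {
    [ ! -f img.sif ] || [ requirements.txt -nt img.sif ] || [ recipe.def -nt img.sif ]
}

# Name of the newest slurm-<jobid>.out in the current folder (empty if there is none).
latest_slurm_log() {
    ls -t slurm-*.out 2>/dev/null | head -n 1
}

# Typical use inside /work/username/example_apptainer:
#   if needs_rebuild; then apptainer build --force img.sif recipe.def; fi
#   sbatch slurm.job
#   cat "$(latest_slurm_log)"    # once the job has finished or failed
```

The --force flag lets apptainer build overwrite an existing img.sif instead of asking for confirmation.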

If this was helpful to you, consider dropping a star on https://github.com/xarxaxdev/example_apptainer or donating. I am planning to keep this website as a personal blog and to document my projects.